Memory Makers on Track to Double HBM Output in 2023

by Anton Shilov on August 9, 2023 3:00 PM EST

TrendForce projects a remarkable 105% increase in annual bit shipments of high-bandwidth memory (HBM) this year. The boost comes in response to soaring demand from developers of AI and high-performance computing processors, notably Nvidia, and from cloud service providers (CSPs). To meet that demand, Micron, Samsung, and SK Hynix are reportedly increasing their HBM capacities, but the new production lines will likely start operations only in Q2 2024.

More HBM Is Needed

Memory makers managed to more or less balance HBM supply and demand in 2022, a rare occurrence in the DRAM market. However, an unprecedented spike in demand for AI servers in 2023 forced developers of AI processors (most notably Nvidia) and CSPs to place additional orders for HBM2E and HBM3 memory. As a result, DRAM makers are using all of their available capacity and have started ordering additional tools to expand their HBM production lines and meet future demand for HBM2E, HBM3, and HBM3E memory.

However, meeting this HBM demand is not straightforward. In addition to making more DRAM devices in their cleanrooms, DRAM manufacturers need to assemble these devices into intricate 8-Hi or 12-Hi stacks, and this is where the bottleneck appears to be: according to TrendForce, they do not have enough TSV (through-silicon via) production tools. To produce enough HBM2, HBM2E, and HBM3 memory, leading DRAM producers have to procure new equipment, which takes 9 to 12 months to build and install in their fabs. As a result, the analysts expect a substantial increase in HBM production around Q2 2024.

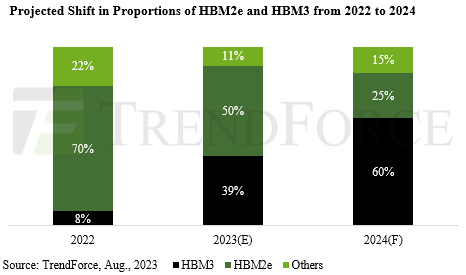

A noteworthy trend pinpointed by TrendForce analysts is the shift in preference from HBM2E (used by AMD's Instinct MI210/MI250/MI250X, Intel's Sapphire Rapids HBM and Ponte Vecchio, and Nvidia's H100/H800 cards) to HBM3 (used in Nvidia's H100 SXM and GH200 supercomputer platform and in AMD's forthcoming Instinct MI300-series APUs and GPUs). TrendForce believes that HBM3 will account for 50% of all HBM memory shipped in 2023, whereas HBM2E will account for 39%. In 2024, HBM3 is poised to account for 60% of all HBM shipments. This growing demand, combined with HBM3's higher price point, promises to boost HBM revenue in the near future.
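The shipment shares quoted above do not add up to 100%. A quick sketch of that arithmetic, assuming (our assumption, not something TrendForce states) that the unattributed remainder belongs to HBM2 and other older generations; the `remainder` helper is hypothetical and only illustrates the subtraction:

```python
# Shares of all HBM bits shipped, in percent, as quoted from TrendForce above.
shares_2023 = {"HBM3": 50, "HBM2E": 39}
shares_2024 = {"HBM3": 60}

def remainder(shares: dict[str, int]) -> int:
    """Percentage not attributed to any listed generation.

    Assumption: this slice goes to HBM2 and other older HBM types.
    """
    return 100 - sum(shares.values())

print(remainder(shares_2023))  # 11
print(remainder(shares_2024))  # 40
```

The calculation does not depend on which label carries 50% and which carries 39%; either way, about a tenth of 2023 shipments falls outside HBM3 and HBM2E.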

Just yesterday, Nvidia launched a new version of its GH200 Grace Hopper platform for AI and HPC that uses HBM3E memory instead of HBM3. The new platform, consisting of a 72-core Grace CPU and a GH100 compute GPU, offers higher memory bandwidth for the GPU and carries 144 GB of HBM3E memory, up from 96 GB of HBM3 in the original GH200. Given the immense demand for Nvidia's AI offerings, Micron, which will be the only supplier of HBM3E in 1H 2024, stands to benefit significantly from the new HBM3E-powered hardware.

HBM Is Getting Cheaper, Kind Of

TrendForce also noted a consistent annual decline in the average selling prices (ASPs) of HBM products. To stimulate interest and offset decreasing demand for older HBM types, prices for HBM2E and HBM2 are set to drop in 2023, according to the market tracking firm. While 2024 pricing is still undecided, further reductions for HBM2 and HBM2E are expected as HBM production increases and manufacturers pursue growth.

In contrast, HBM3 prices are predicted to remain stable, perhaps because HBM3 is currently available exclusively from SK Hynix, and it will take some time for Samsung to catch up. Because HBM3 is priced higher than HBM2E and HBM2, it could push HBM revenue to an impressive $8.9 billion in 2024, a 127% YoY increase, according to TrendForce.
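The two TrendForce figures above also imply a 2023 baseline. A minimal sketch that backs it out, assuming the 127% growth is measured against full-year 2023 HBM revenue; the resulting value is our own derivation, not a number TrendForce published, and `implied_prior_year` is a hypothetical helper:

```python
def implied_prior_year(current: float, yoy_growth_pct: float) -> float:
    """Recover last year's value from this year's value and its YoY growth in percent."""
    return current / (1 + yoy_growth_pct / 100)

revenue_2024 = 8.9   # billions of USD, TrendForce projection quoted above
growth_pct = 127     # YoY increase, TrendForce

# Assumption: growth is relative to full-year 2023 revenue.
revenue_2023 = implied_prior_year(revenue_2024, growth_pct)
print(f"Implied 2023 HBM revenue: ${revenue_2023:.1f}B")  # Implied 2023 HBM revenue: $3.9B
```

In other words, a 127% increase means 2024 revenue is about 2.27 times the 2023 figure, which puts 2023 HBM revenue at roughly $3.9 billion.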

SK Hynix Leading the Pack

SK Hynix commanded 50% of the HBM memory market in 2022, followed by Samsung with 40% and Micron with 10%. In 2023 and 2024, Samsung and SK Hynix will continue to dominate the market, holding nearly identical shares that together add up to about 95%, TrendForce projects. Micron's market share, meanwhile, is expected to hover between 3% and 6%.

For now, SK Hynix seems to have an edge over its rivals. It is the primary producer of HBM3 and the only company supplying memory for Nvidia's H100 and GH200 products. Samsung, by comparison, predominantly manufactures HBM2E for other chip makers and CSPs and is gearing up to start making HBM3. Micron, which does not have HBM3 on its roadmap, produces HBM2E (which Intel reportedly uses for its Sapphire Rapids HBM CPU) and is getting ready to ramp up production of HBM3E in 1H 2024. That will give it a significant competitive advantage over its rivals, which are expected to start making HBM3E only in 2H 2024.

Source: TrendForce

9 Comments


Sivar - Wednesday, August 9, 2023

"but new production lines will likely start operations only in Q2 2022" Date typo?

PeachNCream - Wednesday, August 9, 2023

It's Anton, so yes, there are typos ;)

Rudde - Thursday, August 10, 2023

Reading further, the date is specified as Q2 2024.

nandnandnand - Thursday, August 10, 2023

Pray for overproduction, AI bubble bursts, prices drop, and consumer products can include HBM. Ha ha.

LiKenun - Thursday, August 10, 2023

There was an interesting take from an academic who said AI could train using compressed data, which was not only necessary because of finite resources, but much more efficient with minimal loss in the end result. The bubble could burst for memory, storage, and high-bandwidth networking if this proves true and the industry/tech stack moves in that direction.

nandnandnand - Thursday, August 10, 2023

If by compressed data, you mean the research being done to prune datasets and optimize model sizes. Ideally, model sizes can drop dramatically, e.g. 90%, with imperceptible quality loss. Possibly even an improvement if junk data is taken out.

brucethemoose - Friday, August 11, 2023

The training RAM bottleneck isn't really the data itself, but the model weights and the training process. There are lots of experiments with quantization, pruning, backwards-pass-free training, data augmentation/filtering and such, but at the end of the day you *have* to pass through billions of parameters for each training/inference pass.

Diogene7 - Friday, August 11, 2023

Would it be possible to get an idea/range of the Average Selling Price (ASP) for 16GB and 24GB HBM2E / HBM3, and to compare it to SK Hynix 24GB LPDDR5X, for example? It is to get an idea / order of magnitude of how much more expensive HBM memory is versus LPDDR5 memory for the same capacity…

phoenix_rizzen - Tuesday, August 29, 2023

You've got your labels backwards in this paragraph, compared to the graphic displayed right after it: "TrendForce believes that HBM3 will account for 50% of all HBM memory shipped in 2023, whereas HBM2E will account for 39%. In 2024, HBM3 is poised to account for 60% of all HBM shipments. This growing demand, when combined with its higher price point, promises to boost HBM revenue in the near future."

Should be HBM3 at 39% (black) and HBM2e at 50% (dark green) in 2023.